Semalt Recommendations On How To Choose Content For 2022

Create content that both Google users and Google's algorithms will love! To make it easier for you, we've prepared an overview of content marketing trends for 2022, along with some useful tips that will make creating content feel less like black magic.

How do you create content that generates engagement? What has changed in content marketing in recent years? Which practices are no longer relevant, and which are still worth using? The online world changes very quickly. To keep up with it, whether you are a marketer, an SEO specialist, a copywriter, or an e-commerce owner, you really have to keep your finger on the pulse.

In this article, we explain how to create fresh, innovative, Google-compliant content: content that people will want to... well, what exactly? Read? Watch? Or perhaps experience? Sounds abstract? Not necessarily. Don't fall behind the competition: regularly refresh your knowledge and your best practices so that content marketing in 2022 holds no secrets for you.

How to choose content for 2022? Trends and the current situation

Can you keep planning your content the way you always have, by using template schedules, reposting previous posts, and copying graphics from free stock sites? A quick look at how many brands operate suggests that yes, you can... but why would you? Of course, we are not saying that proven paths are bad (after all, why reinvent the wheel?) or that old content cannot be recycled (we wrote an entire article about it, because recycling content is not a bad thing!). Still, you should always be careful not to accidentally drop out of the game. What trends does 2022 herald in content marketing? What do you need to pay attention to?

Chronic Content Fatigue, or… we have enough content

Sounds like a bad start for an article about content marketing in the coming year, right? Paradoxically, nothing could be further from the truth. It's true that internet users are fed up with derivative, low-quality content stuffed with links and intrusive ads, ubiquitous clickbait, and unexpected pop-ups. But this by no means implies that "content marketing is over". Instead, the situation creates new challenges for specialists, pushing even the most popular brands to move away from traditional forms of content and rise above the average.

What are brands investing in?

In 2021, video came first among content marketing investments, followed by events (including online events such as webinars, which remain popular). In third place came owned communication channels, such as a company blog or newsletter. It is worth noting that the expenses also include elements of content marketing such as getting to know your target group, technology, UX, and building a community on social media. Clearly, content marketing in 2022 is not just copywriting!

How to choose content for 2022? 10 simple steps to successful content marketing

Work for the user, not the other way around

When creating content, including sales-oriented content, focus on solving the problems your audience is struggling with. What motivates them? Why did they type this particular query into Google and not another? What do they need right now? Use every opportunity to meet their expectations fully. Why is this so important? Generating organic traffic (e.g., through SEO) is just the beginning: keeping visitors on the page, reducing bounce rates, and increasing engagement are just as important. Google has long been paying more and more attention to user intent, and so should you.

Example: You run a travel agency and want to increase sales. Try creating content (an article, a video, a news item) that helps users understand the current pandemic restrictions in individual countries and shows that you take their safety seriously. Suggest how they can book their dream trip abroad, and at the same time make sure they don't turn to the competition.

Do not put keywords above the value of the text

As we wrote above, the content you create must be valuable and serve the user. Your potential customers must want to engage with your brand, and one of the things that often undermines trust in a company is... over-optimized content. Keyword stuffing and sticking to a rigid, increasingly outdated framework do nothing to build your brand's reputation; what's more, Google looks on them less and less favorably.
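To spot the keyword excess described above in a draft, you can measure how much of the text a key phrase takes up. The following is a minimal Python sketch under our own assumptions: the function name `keyword_density`, the simple word-based tokenization, and the 3% warning threshold are illustrative choices, not a limit published by Google.

```python
import re

# Hypothetical warning threshold (3%); Google publishes no official keyword-density limit.
MAX_DENSITY = 0.03


def keyword_density(text: str, phrase: str) -> float:
    """Return the share of words in `text` taken up by occurrences of `phrase`."""
    words = re.findall(r"[\w']+", text.lower())
    phrase_words = re.findall(r"[\w']+", phrase.lower())
    n = len(phrase_words)
    if not words or n == 0:
        return 0.0
    # Count the phrase wherever it appears as a consecutive run of words.
    hits = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase_words
    )
    # Each hit accounts for n of the words in the text.
    return hits * n / len(words)


if __name__ == "__main__":
    draft = (
        "Cheap flights to Spain. Book cheap flights to Spain today, "
        "because cheap flights to Spain sell out fast."
    )
    density = keyword_density(draft, "cheap flights")
    status = "possibly over-optimized" if density > MAX_DENSITY else "ok"
    print(f"{density:.1%} ({status})")
```

A number like this is only a prompt to reread the text; whether a repetition sounds natural to a human reader matters more than any threshold.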

Don't get stuck in the usual patterns that don't work anymore

Regularly check what's working and what isn't. Test new solutions and experiment with new formats: it may turn out that a podcast doesn't win over your audience, while regularly published video content does. You can't simply reuse the strategy from previous years and count on it to keep delivering the same results while your competitors reach for the latest solutions.

Create comprehensive, valuable content

The trends shaping content marketing in 2022 suggest that it is actually better to build one solid compendium of knowledge than dozens of short texts.

Does this contradict the idea that users want short, quick, and concise content? Note, however, that we are not suggesting writing just to hit a specific word count, nor creating slow, unengaging video or audio content. The goal of extensive content is to answer the user's question exhaustively, which encourages them to stay on your website because they simply won't need to look elsewhere for a solution.

Tip: If you are worried that the user will get lost in long texts or complex videos, remember that you can make navigation easier for them, e.g., by creating a convenient table of contents.
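If your long-form content is published as HTML, a table of contents can be generated from its headings. Below is a minimal Python sketch using only the standard library's `html.parser`. It assumes the sections use `<h2>` and `<h3>` headings and that each heading on the page carries an `id` produced by the same `slugify` function (adding those ids is not shown); the helper names `slugify` and `build_toc` and the anchor-naming scheme are hypothetical, not the behavior of any particular CMS.

```python
import re
from html import escape
from html.parser import HTMLParser
from typing import List, Optional, Tuple


def slugify(text: str) -> str:
    """Turn heading text into a URL-fragment id (hypothetical naming scheme)."""
    slug = re.sub(r"[^\w\s-]", "", text.lower()).strip()
    return re.sub(r"[\s_-]+", "-", slug)


class HeadingCollector(HTMLParser):
    """Collect (level, text) pairs for <h2> and <h3> elements in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: List[Tuple[int, str]] = []
        self._level: Optional[int] = None
        self._buffer: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._level = int(tag[1])
            self._buffer = []

    def handle_data(self, data):
        # Text inside nested tags (e.g. <strong>) is collected too.
        if self._level is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._buffer).strip()))
            self._level = None


def build_toc(page_html: str) -> str:
    """Return a flat HTML list of anchor links; <h3> entries get a CSS class."""
    collector = HeadingCollector()
    collector.feed(page_html)
    lines = ["<ul>"]
    for level, text in collector.headings:
        css = ' class="toc-h3"' if level == 3 else ""
        lines.append(f'<li{css}><a href="#{slugify(text)}">{escape(text)}</a></li>')
    lines.append("</ul>")
    return "\n".join(lines)
```

For example, `build_toc("<h2>Create full, valuable content</h2>")` yields a list whose single link points to `#create-full-valuable-content`. Keeping the list flat and marking subheadings with a class leaves the visual nesting to your stylesheet.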

Take care of a good user experience

This is not just about UX, although website usability certainly matters here too. More broadly, from a content marketing perspective, what counts is the whole experience that follows a click on a link to your website, whether that link appears in search results, in an ad for your product, or in a social media post.

One basic principle to follow is to give users exactly what they came for, at a quality that exceeds what the competition offers. That is why the way you present content matters (it should be visually and logically clear, as well as distinctive and memorable), as does matching it to the user's expectations (no clickbait).

Support content with SEO optimization

In many cases, optimization is the answer to marketers' dilemmas. Well-prepared graphics, professional analysis of key phrases, and a fast-loading website will also prove useful in content marketing.

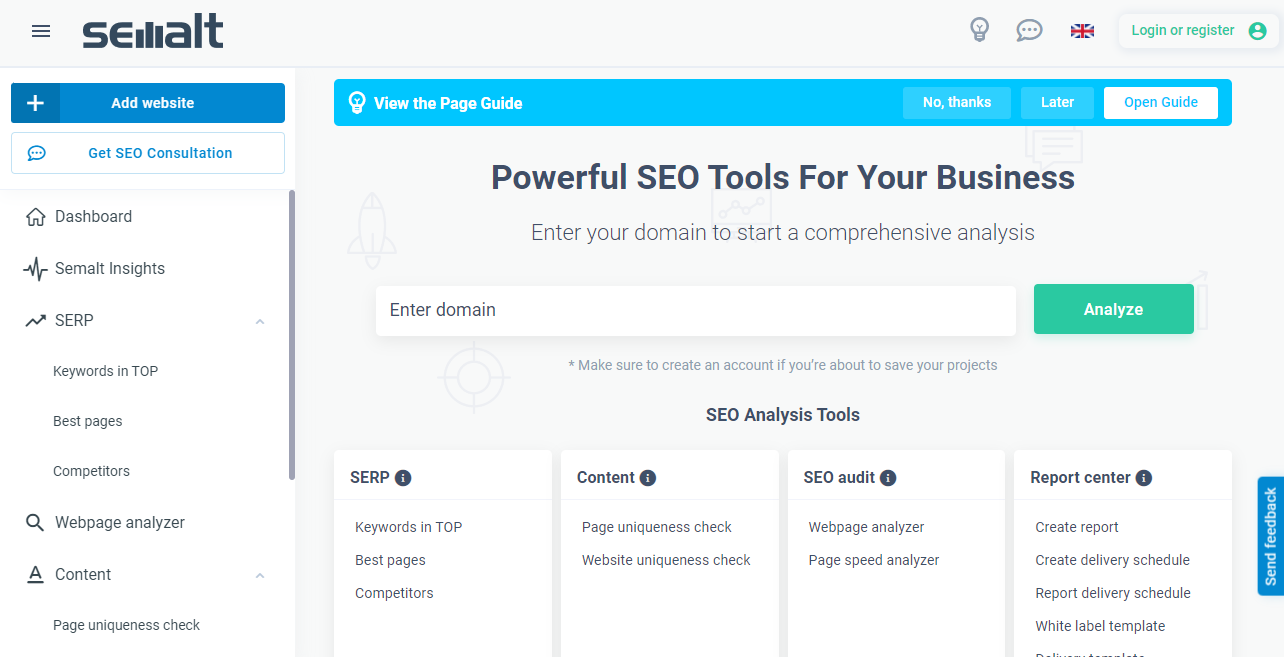

Example: You have written a really great article on a topic related to your company's business, but for some reason it doesn't rank as high as you would like and only brings in traffic from social networks. This may mean it lacks SEO optimization, from key phrase analysis and an appropriate SEO title, through headings and alt text, to internal linking and schema markup. Use a tool such as the Dedicated SEO Dashboard to solve this problem.
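Some of the on-page elements listed above can be checked automatically. The following minimal Python sketch, built on the standard library's `html.parser`, flags a missing or overly long `<title>`, a page without exactly one `<h1>`, and images without alt text. The 60-character title limit and the one-`<h1>` rule are common rules of thumb we assume here, not official Google requirements; the names `PageAudit` and `audit_page` are hypothetical, and the sketch is neither a substitute for nor a description of the Dedicated SEO Dashboard.

```python
from html.parser import HTMLParser
from typing import List

# Assumed rule of thumb: longer titles are often truncated in search results.
MAX_TITLE_LENGTH = 60


class PageAudit(HTMLParser):
    """Gather the title text, the <h1> count, and images lacking alt text."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.h1_count = 0
        self.images_without_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and not (attributes.get("alt") or "").strip():
            # Counts both a missing and an empty alt; alt="" is valid for
            # purely decorative images, so review these results by hand.
            self.images_without_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(page_html: str) -> List[str]:
    """Return human-readable warnings for a few basic on-page SEO elements."""
    audit = PageAudit()
    audit.feed(page_html)
    warnings = []
    title = audit.title.strip()
    if not title:
        warnings.append("missing <title>")
    elif len(title) > MAX_TITLE_LENGTH:
        warnings.append(f"title is {len(title)} characters long")
    if audit.h1_count != 1:
        warnings.append(f"expected one <h1>, found {audit.h1_count}")
    if audit.images_without_alt:
        warnings.append(f"{audit.images_without_alt} image(s) without alt text")
    return warnings
```

Parsing with the standard library keeps the sketch dependency-free; a fuller audit would also fetch the live page and look at heading hierarchy, internal links, and structured data.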

Combine planning with RTM and regular evaluation of activities

In 2022, you can't afford delays: once you know how to choose your content for the year, plan it wisely and well in advance. Allow for possible changes, but avoid ending up with nothing to post, which breaks your publishing continuity and leaves your blog silent.

However, leave yourself room for Real-Time Marketing (RTM) and keep up with trends that you can successfully use to support sales. Research what your competitors are doing and track how much revenue each activity brings you.

Make sure your content is varied - find your format

Challenge yourself and break away from the most traditional formats by experimenting with podcasts, vlogs, Instagram art series, engaging TikTok videos, and long-form articles. When planning your content, think about the best way to convey it: as a video or as text? Would it be best to publish both an e-book and an audiobook to reach a wider audience? How can you make your content more interactive? Watch the trends: the growth of video and audio content in 2022 seems unstoppable.

Don't try to be an expert at everything

Find your niche. Internet marketing covers such a wide spectrum of activities that, unless you have a whole team of creators, project managers, and great analysts, plus a huge budget, it will be very difficult to run every strategy in parallel. Remember also that not every medium or trend suits your brand and audience, which is why it is so important to distinguish what is valuable in theory from what will actually help increase brand recognition, traffic, or conversions.

Use content marketing to build your brand

If you work in content marketing, you certainly know that its goal is to create valuable content for users. It's up to you to plan your content for the coming year so that it converts. Also, don't forget that content marketing combined with effective storytelling and branding is an excellent tool for building brand recognition. Be memorable: leave an impression that makes users want to come back.

How to choose content for 2022? - summary

How to choose content for 2022? With caution, courage, and flexibility! Don't be afraid to experiment, but always put the user at the center: let them draw freely from your content, share it, and remember your brand for a long time.

If you want to learn more about SEO and website promotion, we invite you to visit the Semalt blog.

FAQ

Does writing a blog pay off in 2022?

It all depends on how the blog is run. Personal blogs run by amateurs are becoming less and less popular, while corporate and industry blogs are enjoying great popularity.

Text combined with photos or infographics works well both to interest, educate, and retain users and to meet Google's expectations. As a result, it has a positive effect on both the user experience and your position in the SERPs.

What content formats should you use in 2022?

The types of content generating the most engagement in 2022 include:

- video (short and long);

- podcasts;

- other forms of audio (e.g. audiobooks);

- live meetings.